```python
import numpy as np
import cv2
import cv2.aruco as aruco
import matplotlib.pyplot as plt
from scipy.spatial.transform import Rotation as Rot

plt.rcParams['figure.figsize'] = [10, 10]
np.set_printoptions(precision=2, suppress=True)
```

Introduction

In a previous post, I’ve shown how to determine the position and orientation of a camera using an ArUco code.

However, when we have multiple ArUco tags, we might want to find the relationships between them.

This will require us to work with different coordinate frames and transform coordinates from one frame to another.

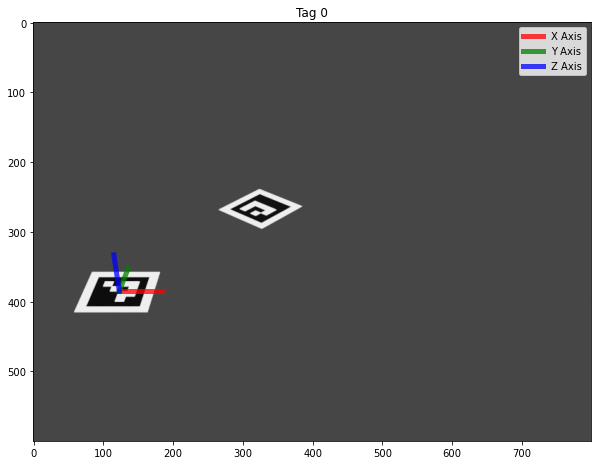

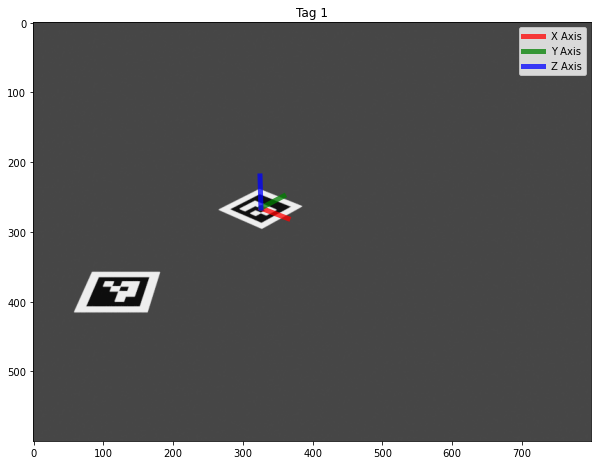

I’ve created a synthetic example, described below.

It consists of two tags, with ArUco IDs 0 and 1.

Tag 0 sits at the origin and has not been transformed.

Tag 1 has undergone two transformations.

- Translation by [3, 4, 0].

- Rotation by 37 degrees around its Z-axis.

The camera itself is located at [5, -14, 10].
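Because the scene is synthetic, we can also build noise-free reference values for it. The sketch below is my own reconstruction, not the code used to render the images: the `rot_z` and `look_at` helpers, the look-at target (the midpoint between the tags) and the direction of the 37-degree rotation (taken as counter-clockwise about +Z) are all assumptions. It stores each camera pose in the same `(R, C)` layout that the `tags` dictionary uses later, with `R` taken to rotate camera coordinates into tag coordinates.

```python
import numpy as np

def rot_z(deg):
    """Rotation matrix for a rotation of `deg` degrees about the Z-axis."""
    t = np.radians(deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

def look_at(cam_pos, target, up=(0.0, 0.0, 1.0)):
    """Camera-to-world rotation for a camera at cam_pos looking at target.

    Columns are the camera's x (right), y (down) and z (forward) axes in
    world coordinates, following the OpenCV camera convention.
    """
    z = np.asarray(target, dtype=float) - np.asarray(cam_pos, dtype=float)
    z /= np.linalg.norm(z)
    x = np.cross(z, up)
    x /= np.linalg.norm(x)
    y = np.cross(z, x)
    return np.column_stack([x, y, z])

# Scene from the text: tag 0 at the world origin, tag 1 translated by
# [3, 4, 0] and rotated 37 degrees about Z (direction assumed).
tag_poses = {
    0: (np.eye(3), np.zeros((3, 1))),
    1: (rot_z(37), np.array([[3.0], [4.0], [0.0]])),
}
cam_pos = np.array([[5.0], [-14.0], [10.0]])
# Assumption: the camera looks at the midpoint between the two tags.
R_wc = look_at(cam_pos.ravel(), [1.5, 2.0, 0.0])

# Express the camera's orientation and position in each tag's frame.
tags_exact = {}
for tag_id, (R_wt, t_wt) in tag_poses.items():
    R = R_wt.T @ R_wc              # camera-to-tag rotation
    C = R_wt.T @ (cam_pos - t_wt)  # camera position in tag coordinates
    tags_exact[tag_id] = (R, C)
```

Comparing measured values against `tags_exact` separates the error due to detection noise from any error in the frame-change arithmetic itself.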

Let’s now detect the ArUco tags in the image and work out the position and orientation of the camera in each tag’s coordinate system.

Changing Coordinate Frames

From the above, we can see two different coordinate systems, one per tag.

Let’s take a bird’s eye view of the situation:

Previously, we found the rotation and the camera’s position in the coordinate frame of each tag.
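If the previous post followed the usual OpenCV convention, each tag's pose came back as an `rvec`/`tvec` pair describing the tag in the camera's frame (for example from `cv2.solvePnP`). The sketch below shows one way such a pair can be turned into a camera rotation and position in the tag's frame. `camera_pose_in_tag` is a hypothetical helper, and I reimplement Rodrigues' formula in NumPy (the same conversion `cv2.Rodrigues` performs) so the snippet runs without OpenCV.

```python
import numpy as np

def rodrigues(rvec):
    """Convert an axis-angle vector into a 3x3 rotation matrix (Rodrigues' formula)."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    # Skew-symmetric cross-product matrix of the unit rotation axis.
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * K @ K

def camera_pose_in_tag(rvec, tvec):
    """Hypothetical helper: turn a tag pose expressed in the camera frame
    (rvec, tvec) into the camera's rotation and position in the tag frame."""
    R_ct = rodrigues(rvec)                   # maps tag coords -> camera coords
    t = np.asarray(tvec, dtype=float).reshape(3, 1)
    R = R_ct.T                               # maps camera coords -> tag coords
    C = -R @ t                               # camera position in tag coords
    return R, C
```

The position follows from requiring that the camera's own position maps to the origin of the camera frame: `R_ct @ C + t = 0`, so `C = -R_ct.T @ t`.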

```python
R_0, C_0 = tags[0]
print(R_0)
print(C_0)
```

```
[[ 1.   -0.   -0.  ]
 [ 0.   -0.49  0.87]
 [-0.   -0.87 -0.49]]
[[  4.61]
 [-15.17]
 [ 10.25]]
```

```python
R_1, C_1 = tags[1]
print(R_1)
print(C_1)
```

```
[[ 0.78  0.3  -0.54]
 [ 0.62 -0.37  0.69]
 [ 0.01 -0.88 -0.48]]
[[ 13.2 ]
 [-14.46]
 [ 10.  ]]
```
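One way to check which convention `R` follows is to look at its third column. If `R` maps camera coordinates into tag coordinates, that column is the camera's optical axis expressed in the tag's frame, so it should point roughly from `C` towards the tag's origin. The sketch below (the `axis_alignment` helper is my own) checks this on the rounded values printed above.

```python
import numpy as np

# Rotations and camera positions as printed above (rounded to 2 d.p.).
R_0 = np.array([[1.0, 0.0, 0.0],
                [0.0, -0.49, 0.87],
                [0.0, -0.87, -0.49]])
C_0 = np.array([[4.61], [-15.17], [10.25]])
R_1 = np.array([[0.78, 0.3, -0.54],
                [0.62, -0.37, 0.69],
                [0.01, -0.88, -0.48]])
C_1 = np.array([[13.2], [-14.46], [10.0]])

def axis_alignment(R, C):
    """Cosine of the angle between the camera's optical axis (third column
    of R) and the direction from the camera position to the tag origin."""
    optical_axis = R[:, 2]
    to_tag = -C.ravel() / np.linalg.norm(C)
    return float(optical_axis @ to_tag)

print(axis_alignment(R_0, C_0), axis_alignment(R_1, C_1))
```

Both cosines come out above 0.95, which supports reading `R_0` and `R_1` as camera-to-tag rotations; that reading is what makes the frame change that follows work.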

Given the information we have, what if we want to find the position and orientation of Tag 1 with respect to Tag 0’s coordinate frame?

Working with all of our measurements in a single frame will provide us with a coherent view of our world.

Fortunately, this is relatively easy.

We can first find the relative rotation between the two tags.
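To see why the relative rotation is computed as `np.dot(R_0, R_1.T)`, assume (as the printed matrices suggest) that `R_i` rotates camera coordinates into Tag i's coordinates. Chaining through the camera frame then gives:

$$
R_\Delta = R_{0 \leftarrow 1} = R_{0 \leftarrow \mathrm{cam}}\, R_{\mathrm{cam} \leftarrow 1} = R_0\, R_1^{-1} = R_0\, R_1^{\mathsf{T}}
$$

The last step uses the fact that the inverse of a rotation matrix is its transpose.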

```python
# Rotation of Tag 1 with respect to Tag 0
R_delta = np.dot(R_0, R_1.T)
```

Once we have done this, we can rotate C_1 into Tag 0’s axes with R_delta and subtract the result from C_0. This gives the vector T_1, the position of Tag 1’s origin in Tag 0’s coordinate frame.
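Under the reading that `R_i` rotates camera coordinates into Tag i's coordinates, a point with coordinates $p_1$ in Tag 1's frame has coordinates $p_0 = R_\Delta p_1 + T_1$ in Tag 0's frame, where $R_\Delta = R_0 R_1^{\mathsf{T}}$ is `R_delta` and $T_1$ is Tag 1's origin expressed in Tag 0's frame. The camera's position is one such point, which yields the expression for `T_1`:

$$
C_0 = R_\Delta\, C_1 + T_1 \quad\Longrightarrow\quad T_1 = C_0 - R_\Delta\, C_1
$$

Setting $p_1 = 0$ in the first relation confirms that $T_1$ is where Tag 1's origin lands in Tag 0's frame.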

```python
# Position of Tag 1's origin in Tag 0's coordinate frame
T_1 = C_0 - np.dot(R_delta, C_1)
T_1
```

```
array([[ 3.08],
       [ 4.21],
       [-0.02]])
```

We can see that Tag 1 is located at [3.08, 4.21, -0.02], while its true position is [3, 4, 0]. The difference comes from noise inherent in the pose estimation.
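The rotation and translation can be bundled into a single 4x4 homogeneous transform, which makes it easy to move any point (not just a tag's origin) from Tag 1's frame into Tag 0's frame. The helpers below (`relative_pose`, `to_homogeneous`, `transform_points`) are a minimal sketch of my own, using the same camera-to-tag reading of `R` as above.

```python
import numpy as np

def relative_pose(R_a, C_a, R_b, C_b):
    """Pose of tag b in tag a's frame, given the camera's rotation (R) and
    position (C) measured in each tag's frame from the same image."""
    R_delta = R_a @ R_b.T            # rotates tag-b coords into tag-a coords
    T = C_a - R_delta @ C_b          # tag b's origin in tag-a coords
    return R_delta, T

def to_homogeneous(R, T):
    """Pack a 3x3 rotation and a (3, 1) translation into a 4x4 transform."""
    H = np.eye(4)
    H[:3, :3] = R
    H[:3, 3] = np.ravel(T)
    return H

def transform_points(H, points):
    """Apply a 4x4 transform to an (N, 3) array of points."""
    pts = np.asarray(points, dtype=float)
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])  # append w = 1
    return (H @ pts_h.T).T[:, :3]
```

For example, `transform_points(to_homogeneous(*relative_pose(R_0, C_0, R_1, C_1)), corners_1)` would express Tag 1's corners in Tag 0's frame, where `corners_1` is a hypothetical (4, 3) array of corner coordinates in Tag 1's frame (it depends on the printed tag size, which isn't given here).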

Tag 1 was also rotated by 37 degrees around its Z-axis. Because this rotation is (almost) purely about Z, the [1, 1] entry of R_delta is the cosine of that angle, so we can measure it as follows:

```python
print(np.degrees(np.arccos(R_delta[1, 1])))
```

```
38.19945877152531
```

As before, there is a slight error between what we have measured and reality.
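`arccos` on a diagonal entry cannot tell a rotation of +θ from one of -θ. Reading the angle from the first column with `arctan2` keeps the sign. The `yaw_degrees` helper below is my own sketch and assumes the rotation is close to a pure Z rotation; for a general rotation, the already-imported `Rot` offers `Rot.from_matrix(R_delta).as_euler('ZYX', degrees=True)[0]`, whose first angle is the same yaw in the intrinsic Z-Y-X convention.

```python
import numpy as np

def yaw_degrees(R):
    """Signed rotation angle about Z, in degrees, read from the first column
    of R. Assumes R is (close to) a pure rotation about Z."""
    return float(np.degrees(np.arctan2(R[1, 0], R[0, 0])))

# Rounded matrices printed earlier in this post.
R_0 = np.array([[1.0, 0.0, 0.0],
                [0.0, -0.49, 0.87],
                [0.0, -0.87, -0.49]])
R_1 = np.array([[0.78, 0.3, -0.54],
                [0.62, -0.37, 0.69],
                [0.01, -0.88, -0.48]])

print(yaw_degrees(R_0 @ R_1.T))
```

With these rounded values the result is about -38 degrees. Since `R_delta` rotates Tag 1 coordinates into Tag 0 coordinates, the negative sign suggests Tag 1 is turned clockwise when viewed from +Z, a detail the `arccos` version hides.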

Conclusion

We have seen how to transform measurements from two different tags into a single coordinate frame. In a future post, I will look at what happens when we have more photos and end up with potentially contradictory measurements, due to the measurement noise we will invariably encounter.